As research continues to address the challenges and explore new frontiers, transfer learning is set to play an increasingly vital role in advancing the capabilities of artificial intelligence and machine learning.

An Introduction to Transfer Learning in Deep Neural Networks

The field of artificial intelligence (AI) has seen tremendous advancements in recent years, with deep neural networks (DNNs) playing a pivotal role in this progress. Among the many techniques that have propelled AI forward, transfer learning stands out as a particularly powerful approach. It reuses knowledge gained on one task to help with another, which can substantially reduce the data and computation needed to train deep neural networks. This article provides an introduction to transfer learning, exploring its underlying principles, methodologies, and applications.

Understanding Transfer Learning

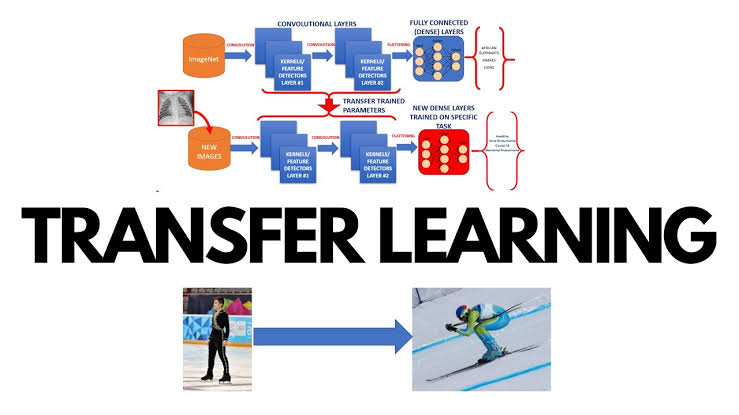

Transfer learning is a machine learning technique where a model developed for one task is reused as the starting point for a model on a second task. It leverages the knowledge gained from solving one problem and applies it to a different but related problem. This approach is especially beneficial when there is a scarcity of labeled data for the target task but an abundance of labeled data for a related task.

The primary motivation behind transfer learning is to make use of the learned features, representations, or parameters from a pre-trained model, thereby reducing the need for extensive data and computational resources. This can lead to faster convergence and improved performance, especially when training deep neural networks.
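The convergence benefit can be illustrated with a deliberately tiny sketch. It is not a deep network: it fits a one-dimensional linear model by gradient descent, first from zero-initialized parameters and then from parameters learned on a related source task. The two tasks (`y = 2.0x + 1.0` and `y = 2.2x + 0.8`), the learning rate, and the tolerance are all assumptions chosen for illustration.

```python
def loss_and_grads(w, b, data):
    """Mean squared error of y = w*x + b and its gradients with respect to w and b."""
    n = len(data)
    residuals = [(w * x + b - y, x) for x, y in data]
    loss = sum(r * r for r, _ in residuals) / n
    grad_w = sum(2 * r * x for r, x in residuals) / n
    grad_b = sum(2 * r for r, _ in residuals) / n
    return loss, grad_w, grad_b


def train(w, b, data, lr=0.05, tol=1e-4, max_steps=10_000):
    """Plain gradient descent; returns (w, b, steps taken until loss < tol)."""
    for step in range(max_steps):
        loss, grad_w, grad_b = loss_and_grads(w, b, data)
        if loss < tol:
            return w, b, step
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, max_steps


xs = [i / 10 for i in range(-10, 11)]           # inputs in [-1, 1]
source = [(x, 2.0 * x + 1.0) for x in xs]       # plentiful source data (assumed task)
target = [(x, 2.2 * x + 0.8) for x in xs[::5]]  # only 5 points for the related target task

# "Pre-train" on the source task, then train on the target task two ways.
w_src, b_src, _ = train(0.0, 0.0, source)
_, _, steps_scratch = train(0.0, 0.0, target)       # start from zero-initialized parameters
_, _, steps_transfer = train(w_src, b_src, target)  # start from the pre-trained parameters

print(f"from scratch: {steps_scratch} steps, from pre-trained: {steps_transfer} steps")
```

Because the source solution already lies close to the target solution, the warm-started run needs fewer gradient steps to reach the same loss. The advantage shrinks, and can reverse, as the two tasks become less related.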

Key Concepts in Transfer Learning

Several key concepts underpin the practice of transfer learning in deep neural networks. Understanding these concepts is crucial for effectively implementing and leveraging transfer learning.

1. Pre-training and Fine-tuning: In transfer learning, the initial model is pre-trained on a large dataset, often for a related task. This pre-trained model serves as a foundation, providing useful features and representations. Fine-tuning involves further training the pre-trained model on the target dataset, allowing it to adapt to the specific nuances of the new task.

2. Feature Extraction: In many cases, the early layers of a neural network learn general features such as edges, textures, and shapes, while the later layers learn more task-specific features. Transfer learning therefore often keeps the early layers fixed (frozen) as a feature extractor and trains or fine-tunes only the later layers on the target task.

3. Domain Adaptation: The source and target domains often differ in their data distributions (for example, photographs versus medical scans), and these differences can degrade a transferred model. Domain adaptation techniques help the model adjust to this shift, ensuring that the transferred knowledge remains relevant and useful.
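The first two concepts above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: `PRETRAINED_W` is a hypothetical stand-in for weights learned on a source task, the target task (is `|x1| + |x2| > 1`?) is invented for the example, and the "network" is a single frozen ReLU layer followed by a newly trained logistic-regression head.

```python
import math
import random

# Hypothetical stand-in for pre-trained weights: 4 hidden units over 2 inputs.
PRETRAINED_W = ((1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0))


def extract_features(x, weights=PRETRAINED_W):
    """Frozen layer: linear map followed by ReLU. These weights are never updated."""
    return [max(0.0, sum(w_i * x_i for w_i, x_i in zip(row, x))) for row in weights]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=1.0, steps=1000):
    """Train only the new logistic-regression head with full-batch gradient descent."""
    n, d = len(features), len(features[0])
    w, b = [0.0] * d, 0.0
    for _ in range(steps):
        grad_w, grad_b = [0.0] * d, 0.0
        for f, y in zip(features, labels):
            err = sigmoid(sum(wj * fj for wj, fj in zip(w, f)) + b) - y
            for j in range(d):
                grad_w[j] += err * f[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Small labeled target dataset (synthetic, seeded for reproducibility).
rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
labels = [1.0 if abs(x1) + abs(x2) > 1.0 else 0.0 for x1, x2 in points]

# The extractor is frozen, so its outputs can be computed once and cached.
features = [extract_features(p) for p in points]
head_w, head_b = train_head(features, labels)


def predict(x):
    """True if the model assigns the point to the positive class."""
    f = extract_features(x)
    return sigmoid(sum(wj * fj for wj, fj in zip(head_w, f)) + head_b) > 0.5


accuracy = sum(predict(p) == (y == 1.0) for p, y in zip(points, labels)) / len(points)
print(f"training accuracy with a frozen extractor: {accuracy:.2f}")
```

The target classes are not linearly separable in the raw inputs, but they are in the frozen features, so training the small head is enough. Full fine-tuning would additionally update the extractor weights, usually with a smaller learning rate so the pre-trained features are not overwritten too quickly.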

Types of Transfer Learning

Transfer learning can be categorized into several types based on the relationship between the source and target tasks and the manner in which knowledge is transferred.

1. Inductive Transfer Learning: In this type, the source and target tasks are different but related. The primary goal is to improve the target task using knowledge from the source task. An example is using a model trained on a large image classification dataset (such as ImageNet) to fine-tune for a specific image classification task with a smaller dataset.

2. Transductive Transfer Learning: Here, the source and target tasks are the same, but the domains are different. The objective is to apply knowledge from a source domain to improve performance in a target domain. For instance, a sentiment analysis model trained on movie reviews can be adapted for sentiment analysis on product reviews.

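3. Unsupervised Transfer Learning: In the commonly used taxonomy, this term covers settings where labeled data is unavailable in both the source and target domains and the target task is itself unsupervised, such as clustering or dimensionality reduction. A closely related and more widely used practice is unsupervised (self-supervised) pre-training, in which a model first learns from unlabeled data and is then fine-tuned on a labeled target task. This is particularly useful when labeled data is scarce. For example, a language model can be pre-trained on large unlabeled text corpora and then fine-tuned for specific tasks such as sentiment analysis or text classification.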

Applications of Transfer Learning

Transfer learning has found applications across a wide range of domains, demonstrating its versatility and effectiveness. Some notable applications include:

1. Natural Language Processing (NLP): Transfer learning has revolutionized NLP by enabling the development of powerful language models such as BERT, GPT, and RoBERTa. These models are pre-trained on massive text corpora and fine-tuned for specific tasks such as sentiment analysis, machine translation, and question answering.

2. Computer Vision: In computer vision, transfer learning has been instrumental in improving image classification, object detection, and segmentation tasks. Pre-trained models on large datasets like ImageNet provide robust feature extractors that can be fine-tuned for specialized applications such as medical imaging and autonomous driving.

3. Healthcare: Transfer learning is being used to enhance diagnostic accuracy and treatment planning in healthcare. For instance, models pre-trained on general medical imaging datasets can be fine-tuned to identify specific diseases, such as cancer or diabetic retinopathy, with high accuracy.

4. Robotics: Transfer learning helps in transferring knowledge from simulated environments to real-world scenarios in robotics. This approach reduces the time and effort required to train robots for complex tasks such as object manipulation and navigation.

5. Finance: In the finance sector, transfer learning is applied to predictive modeling, risk assessment, and anomaly detection. Models trained on historical financial data can be adapted to new markets, instruments, or time periods, for example to forecast trends or to flag potentially fraudulent activity.

Challenges and Future Directions

While transfer learning offers numerous benefits, it also presents certain challenges that need to be addressed for its wider adoption and effectiveness.

1. Negative Transfer: One of the primary challenges is negative transfer, where the knowledge from the source task adversely affects the performance on the target task. This can occur when the source and target tasks are not sufficiently related or when the model overfits to the source task's peculiarities.

2. Domain Mismatch: Differences in data distributions between the source and target domains can lead to suboptimal transfer learning performance. Techniques such as domain adaptation and domain generalization are being developed to mitigate this issue.

3. Computational Resources: Fine-tuning large pre-trained models can be computationally expensive, requiring significant resources in terms of hardware and time. Optimizing this process and developing more efficient transfer learning methods is an ongoing area of research.

4. Interpretability: As with many deep learning techniques, the interpretability of transfer learning models remains a challenge. Understanding how and why certain features transfer well between tasks is crucial for building more robust and reliable models.
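The domain mismatch problem, and one very simple mitigation, can be sketched as follows. The example assumes a single numeric feature, a target domain that is an affine shift of the source (`2 * x + 5`, as if measured by a differently calibrated sensor), and equal class balance in both domains. Per-domain standardization is used here only as a basic baseline and is not one of the more sophisticated methods referred to above.

```python
from statistics import mean, pstdev


def standardize(values):
    """Rescale one domain to zero mean and unit variance using its own statistics."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def fit_threshold(xs, ys):
    """Toy classifier: threshold at the midpoint between the two class means."""
    class0 = [x for x, y in zip(xs, ys) if y == 0]
    class1 = [x for x, y in zip(xs, ys) if y == 1]
    return (mean(class0) + mean(class1)) / 2


def accuracy(xs, ys, threshold):
    """Fraction of points whose side of the threshold matches the label."""
    return sum((x > threshold) == (y == 1) for x, y in zip(xs, ys)) / len(xs)


labels = [0, 0, 0, 1, 1, 1]
source_x = [-0.3, 0.0, 0.3, 1.7, 2.0, 2.3]
target_x = [2 * x + 5 for x in source_x]  # same classes, shifted and rescaled domain

# Train on the source, evaluate on the raw target: the threshold no longer fits.
acc_raw = accuracy(target_x, labels, fit_threshold(source_x, labels))

# Align both domains first. No target labels are needed for the alignment itself;
# they are used here only to measure accuracy.
acc_aligned = accuracy(
    standardize(target_x), labels, fit_threshold(standardize(source_x), labels)
)

print(f"raw target accuracy: {acc_raw:.2f}, after alignment: {acc_aligned:.2f}")
```

On the raw target data the source threshold places every point in the same class, a small-scale picture of how domain mismatch degrades a transferred model. Real domain shifts are rarely a clean affine transformation, so this kind of alignment should be treated as a first check rather than a general solution.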

Looking ahead, the future of transfer learning holds great promise. Research is focused on developing more sophisticated algorithms that can transfer knowledge across diverse tasks and domains more effectively. Additionally, the integration of transfer learning with other advanced AI techniques, such as reinforcement learning and generative models, is expected to unlock new possibilities and applications.

Conclusion

Transfer learning has emerged as a powerful technique for deep neural networks, enabling the efficient reuse of knowledge across different tasks and domains. By starting from pre-trained models and fine-tuning them for specific applications, it can substantially reduce the need for large labeled datasets and extensive computational resources. Its applications span various fields, from natural language processing and computer vision to healthcare and finance, showcasing its versatility and impact.