Explore the future of human-computer interaction through computer vision and gesture recognition, enhancing interface design for intuitive and seamless digital experiences.

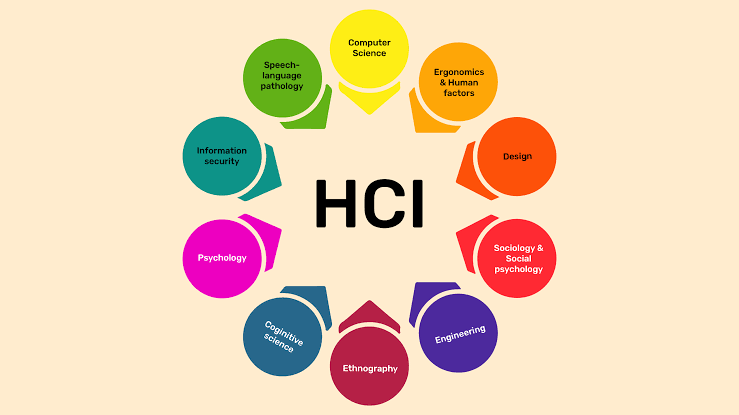

Human-Computer Interaction (HCI) is the field that studies how people engage with technology, focusing on the design of interfaces between users and computers. It draws on disciplines such as computer science, psychology and design to make the user experience seamless and effective. Over time, HCI has evolved from text-based command interfaces to user-friendly graphical interfaces, and now to more natural interaction methods such as voice commands, gestures and eye tracking. One significant development in this area is the use of computer vision to recognize gestures and build user interfaces around them. This innovation opens up new possibilities for how we interact with digital platforms.

The Evolution of Gesture Recognition in HCI

Gesture recognition has been a concept in human-computer interaction for some time, but advances in computer vision have pushed it forward significantly. Early attempts were limited by hardware constraints and by the difficulty of capturing and interpreting human movement. Today, computer vision has matured to the point where systems can reliably track and recognize gestures, giving users a more intuitive and fluid way to engage with devices.

One major advantage of gesture-based interaction is its ability to mimic natural movements. This reduces the learning curve associated with new technologies, making digital interfaces more accessible to people of all ages and skill levels. With traditional keyboard-based setups, users need to learn commands or shortcuts; gesture recognition, by contrast, lets them complete tasks through familiar actions like swiping, pinching or pointing. This opens up opportunities for interaction in virtual reality, gaming, healthcare and everyday computing.

The Importance of Computer Vision in Gesture Recognition

Computer vision allows computers to interpret and act on visual information. It works by capturing images or video and using algorithms to detect and track objects such as hands, body movements or facial expressions. In a gesture recognition system, computer vision analyzes the user's actions in real time and compares them with a set of predefined gestures. This lets users interact with devices without physical controllers or touchscreens.
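To make the "compare with predefined gestures" step concrete, here is a minimal Python sketch. It assumes a hand tracker has already reduced each frame to a single (x, y) hand position, and it matches the observed trajectory against stored templates using dynamic time warping (DTW), one common way to compare movements performed at different speeds. The template names, coordinates and the `max_distance` cut-off are hypothetical, and many real systems use learned models instead of hand-made templates.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x, y) hand position from a hypothetical tracker


def dtw_distance(a: List[Point], b: List[Point]) -> float:
    """Dynamic time warping distance between two 2D trajectories."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] holds the cheapest alignment of a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest neighbouring alignment: a advances, b advances, or both.
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(trajectory: List[Point],
             templates: Dict[str, List[Point]],
             max_distance: float) -> Optional[str]:
    """Return the closest template name, or None if nothing is close enough."""
    best_name, best_dist = None, float("inf")
    for name, template in templates.items():
        dist = dtw_distance(trajectory, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    # Rejecting distant matches keeps random motion from being read as a gesture.
    return best_name if best_dist <= max_distance else None


templates = {
    "swipe_right": [(0, 0), (1, 0), (2, 0), (3, 0)],
    "swipe_up": [(0, 0), (0, 1), (0, 2), (0, 3)],
}
observed = [(0, 0.1), (0.5, 0), (1.1, 0.1), (2, 0), (2.9, 0.1), (3, 0)]
print(classify(observed, templates, max_distance=2.0))  # swipe_right
```

The slightly wobbly, unevenly sampled `observed` path still matches `swipe_right`, because DTW lets one point in a trajectory align with several points in the other.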

Computer vision systems use several methods to recognize gestures. One common method relies on depth-sensing cameras, such as Microsoft's Kinect or Intel's RealSense, to capture data about the user's body. These cameras measure the distance between the user and the device, enabling precise tracking of hand and body movements in three dimensions. Another approach uses machine learning algorithms to teach the system a range of gestures. These algorithms learn to identify patterns in how people move and can adapt to different users' movement styles, making the system more versatile and user-friendly.
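Depth cameras make 3D tracking possible because each pixel carries a distance reading. The following is a minimal sketch, assuming an ideal pinhole camera with no lens distortion, of how a tracked hand pixel and its depth can be turned into a 3D point. The `Intrinsics` values are made-up placeholders; real camera SDKs generally supply their own calibrated parameters and deprojection helpers.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Point3D = Tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters, in pixels (values used below are illustrative)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Point3D:
    """Map pixel (u, v) with measured depth to a 3D point in camera space (meters)."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
prev = deproject(320, 240, 0.80, k)  # hand center in one frame
curr = deproject(320, 240, 0.60, k)  # same pixel, hand moved closer
print(curr)                   # (0.0, 0.0, 0.6)
print(math.dist(prev, curr))  # about 0.2 m of movement toward the camera
```

A plain 2D camera would see no change here, since the hand stays on the same pixel; only the depth channel reveals the push toward the device.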

Gesture Recognition in Interface Design

Gesture recognition plays an important role in interface design, particularly where traditional input methods are impractical or ineffective. In virtual reality (VR) and augmented reality (AR), for instance, navigating with a keyboard or mouse isn't feasible. Gesture-based interfaces offer a more immersive and natural way to engage with these technologies, letting users reach out and interact with virtual objects as if they were tangible.

Gesture recognition also proves valuable in medical settings where hands-free operation is essential. Surgeons, for example, can use gestures to browse through images during an operation without touching a keyboard or mouse, minimizing the risk of contamination. Gesture recognition is also gaining prominence in smart homes, where it lets users control appliances, lighting and entertainment systems with simple hand motions.

Designers face the challenge of developing gesture-based interfaces that are both user-friendly and reliable. Although gesture recognition promises to make interfaces feel intuitive, poorly designed systems can cause frustration when gestures aren't recognized accurately or when the system is overly responsive to incidental movements. Designers need to strike a balance between giving users the flexibility to gesture naturally and establishing clear guidelines for how gestures should be performed.
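One common way to keep a recognizer from being overly responsive is hysteresis: a gesture starts only when a measurement crosses a strict threshold and ends only when it crosses a looser one, so jitter around a single boundary doesn't toggle it on and off. The sketch below applies this idea to a pinch, assuming a hand tracker that reports thumb and index fingertip positions in normalized coordinates. `PinchDetector` and both threshold values are hypothetical and would need tuning per device and user.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


class PinchDetector:
    """Pinch state machine with hysteresis on thumb-index fingertip distance."""

    def __init__(self, press_below: float = 0.04, release_above: float = 0.07):
        if press_below >= release_above:
            raise ValueError("press_below must be smaller than release_above")
        self.press_below = press_below      # hypothetical start threshold
        self.release_above = release_above  # hypothetical release threshold
        self.pinching = False

    def update(self, thumb_tip: Point, index_tip: Point) -> bool:
        """Feed one frame of fingertip positions; return the current pinch state."""
        gap = math.dist(thumb_tip, index_tip)
        if not self.pinching and gap < self.press_below:
            self.pinching = True
        elif self.pinching and gap > self.release_above:
            self.pinching = False
        # Gaps between the two thresholds leave the state unchanged.
        return self.pinching


detector = PinchDetector()
gaps = [0.10, 0.03, 0.05, 0.08, 0.05]
print([detector.update((0.0, 0.0), (g, 0.0)) for g in gaps])
# [False, True, True, False, False]
```

Note that a gap of 0.05 keeps whatever state the detector is already in: it does not start a pinch, but it does not end one either. Widening the band between the thresholds trades responsiveness for stability.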

Challenges and Limitations

While gesture recognition and computer vision open up new opportunities for interaction between humans and computers, obstacles remain. One significant challenge is ensuring that gesture recognition is precise and dependable. Despite advances in computer vision, factors such as lighting conditions, background clutter and the user's position can affect a system's ability to track movements accurately. In dimly lit or cluttered settings, for instance, the camera may struggle to distinguish the user's hand from its surroundings.
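A classic, if simplistic, way to separate a moving hand from a static background is background subtraction with a running-average model. Pixels that differ strongly from the learned background are marked as foreground, and the background is slowly blended toward new frames so it can follow gradual lighting changes. Below is a minimal pure-Python sketch over grayscale frames given as nested lists. `alpha` and `threshold` are illustrative values, and libraries such as OpenCV offer more robust models (for example `cv2.createBackgroundSubtractorMOG2`).

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities, 0-255


class RunningAverageBackground:
    """Background model updated as an exponential moving average."""

    def __init__(self, first_frame: Frame, alpha: float = 0.05, threshold: float = 30.0):
        self.background = [[float(p) for p in row] for row in first_frame]
        self.alpha = alpha          # how quickly the background adapts
        self.threshold = threshold  # minimum intensity change to count as foreground

    def apply(self, frame: Frame) -> List[List[bool]]:
        """Return a foreground mask for `frame` and update the background model."""
        mask = []
        for r, row in enumerate(frame):
            mask_row = []
            for c, value in enumerate(row):
                bg = self.background[r][c]
                foreground = abs(value - bg) > self.threshold
                mask_row.append(foreground)
                if not foreground:
                    # Only blend background pixels, so a hand that pauses
                    # is not absorbed into the background right away.
                    self.background[r][c] = (1 - self.alpha) * bg + self.alpha * value
            mask.append(mask_row)
        return mask


model = RunningAverageBackground([[100] * 3 for _ in range(3)])
frame = [[100, 100, 100], [100, 200, 100], [100, 100, 100]]  # bright "hand" in the center
print(model.apply(frame))
# [[False, False, False], [False, True, False], [False, False, False]]
```

A uniform brightness shift smaller than `threshold` is treated as background and gradually learned, which is what lets this kind of model cope with slow lighting drift. Sudden lighting changes or a hand that matches the background's intensity remain failure cases.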

Another hurdle is building systems that can recognize a wide array of gestures while minimizing false positives. Users may unintentionally make motions that the system misinterprets as commands, triggering unintended actions. To address this, designers often add confirmation steps or choose gestures that are distinct and easy for the system to recognize.
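A confirmation step can be sketched as a small filter between the per-frame classifier and the application. A gesture fires only after the same label has been seen for several consecutive frames, and a cooldown then ignores input briefly so a single motion cannot trigger twice. This is a minimal Python sketch: `GestureConfirmer` and its frame counts are hypothetical, and it assumes an upstream recognizer that emits one label (or `None`) per frame.

```python
from typing import Optional


class GestureConfirmer:
    """Fire a gesture only after it persists for `hold_frames` consecutive frames."""

    def __init__(self, hold_frames: int = 5, cooldown_frames: int = 15):
        self.hold_frames = hold_frames          # hypothetical dwell requirement
        self.cooldown_frames = cooldown_frames  # hypothetical refractory period
        self._candidate: Optional[str] = None
        self._count = 0
        self._cooldown = 0

    def update(self, label: Optional[str]) -> Optional[str]:
        """Feed one per-frame label (None = no gesture); return a confirmed gesture or None."""
        if self._cooldown > 0:
            self._cooldown -= 1
            return None
        if label is None or label != self._candidate:
            # A new or missing label restarts the streak.
            self._candidate = label
            self._count = 0 if label is None else 1
        else:
            self._count += 1
        if self._candidate is not None and self._count >= self.hold_frames:
            confirmed = self._candidate
            self._candidate, self._count = None, 0
            self._cooldown = self.cooldown_frames
            return confirmed
        return None


confirmer = GestureConfirmer(hold_frames=3, cooldown_frames=2)
labels = ["swipe", None, "swipe", "swipe", "swipe", "swipe", "swipe"]
print([confirmer.update(label) for label in labels])
# [None, None, None, None, 'swipe', None, None]
```

The single dropped frame at the start resets the streak, so the momentary "swipe" is ignored; only the sustained one fires, and the cooldown swallows the frames that follow it.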

Fatigue is another problem. Although gesture-based interfaces can be user-friendly, they can also be physically tiring to use over long periods. Holding a hand raised to operate a device might feel convenient for a short time, but it can become uncomfortable or impractical with prolonged use. Designers must consider the ergonomics of gesture-based systems and ensure they don't place undue strain on users.

The Future of Gesture-Based Interfaces

As computer vision and AI progress, we can expect more advanced gesture recognition systems to emerge. One area of exploration is combining gesture recognition with other input methods, such as voice commands and eye tracking. By merging these technologies, designers can create interfaces that let users interact with devices in new ways. For example, a user might issue a voice command to start a task and then use gestures to adjust the details, or look at an item to select it and then swipe to act on it.
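The "look to select, swipe to act" pattern can be sketched as a simple late-fusion rule. The system remembers the most recent gaze target with a timestamp, and when a swipe arrives, it applies the swipe to that target only if the gaze is recent enough. The class, its event methods and the 0.5-second window below are hypothetical and chosen for illustration. They assume eye-tracking and gesture recognizers that deliver timestamped events.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class GazeEvent:
    target: Optional[str]  # UI element under the user's gaze, or None
    timestamp: float       # seconds


class GazeSwipeFusion:
    """Combine the latest gaze target with a swipe gesture into one command."""

    def __init__(self, max_gap_s: float = 0.5):
        self.max_gap_s = max_gap_s  # hypothetical time window for pairing events
        self._last_gaze: Optional[GazeEvent] = None

    def on_gaze(self, target: Optional[str], timestamp: float) -> None:
        self._last_gaze = GazeEvent(target, timestamp)

    def on_swipe(self, direction: str, timestamp: float) -> Optional[Tuple[str, str]]:
        """Return (target, direction) if a recent gaze selected something, else None."""
        gaze = self._last_gaze
        if gaze is None or gaze.target is None:
            return None  # nothing selected by gaze
        if timestamp - gaze.timestamp > self.max_gap_s:
            return None  # gaze is stale; ignore the swipe
        return (gaze.target, direction)


fusion = GazeSwipeFusion()
fusion.on_gaze("photo_3", timestamp=10.0)
print(fusion.on_swipe("left", timestamp=10.3))  # ('photo_3', 'left')
print(fusion.on_swipe("left", timestamp=11.0))  # None
```

Requiring both signals within a short window is one way multimodal input can also reduce false positives: a stray swipe with no recent gaze target does nothing.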

There are also exciting prospects in using wearable devices to enhance gesture recognition. Wearables equipped with sensors can provide more detailed data about a user's motions, leading to more precise and responsive systems. For instance, smart gloves could monitor the position of each finger and provide haptic feedback that replicates the sensation of touching an object.
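On the sensing side, a smart glove might report one raw reading per finger from a bend sensor. The minimal sketch below maps those readings linearly to bend angles and then checks for a simple pose. It assumes hypothetical raw sensor values and a per-user calibration that records each finger's reading when straight and when fully bent. It covers only finger tracking, not the haptic feedback half of the idea, and the 90-degree range and fist rule are simplifications.

```python
from typing import Dict, Tuple

FINGERS = ("thumb", "index", "middle", "ring", "pinky")
Calibration = Dict[str, Tuple[float, float]]  # finger -> (straight reading, bent reading)


def bend_angle(raw: float, straight: float, bent: float, max_angle_deg: float = 90.0) -> float:
    """Linearly map a raw sensor reading to a bend angle, clamped to [0, max_angle_deg]."""
    span = bent - straight
    if span == 0:
        raise ValueError("straight and bent readings must differ")
    fraction = (raw - straight) / span
    fraction = min(max(fraction, 0.0), 1.0)  # ignore readings outside the calibrated range
    return fraction * max_angle_deg


def finger_angles(readings: Dict[str, float], calibration: Calibration) -> Dict[str, float]:
    """Convert one raw reading per finger into bend angles in degrees."""
    return {f: bend_angle(readings[f], *calibration[f]) for f in FINGERS}


def is_fist(angles: Dict[str, float], min_angle_deg: float = 60.0) -> bool:
    """Under this simplified rule, a fist means every finger is bent past min_angle_deg."""
    return all(angles[f] >= min_angle_deg for f in FINGERS)


calibration = {f: (200.0, 600.0) for f in FINGERS}  # made-up calibration readings
readings = {"thumb": 520, "index": 500, "middle": 600, "ring": 590, "pinky": 560}
angles = finger_angles(readings, calibration)
print(angles["index"], is_fist(angles))  # 67.5 True
```

Because the mapping divides by `bent - straight`, it also works for sensors whose reading falls as the finger bends, and per-user calibration is what lets the same glove fit different hands.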

Conclusion

The combination of computer vision and gesture recognition is changing how we interact with technology. Gesture-based systems offer natural interfaces that could improve the usability and flexibility of devices. However, designers need to keep in mind challenges related to accuracy, comfort and user fatigue to ensure these systems work reliably and are easy to use. With further advances in technology on the horizon, we can expect more applications of gesture recognition in fields such as virtual reality and healthcare, making the interaction between humans and computers smoother than ever.