Discover the transformative power of language modeling in artificial intelligence. Learn how advanced models enable machines to understand and generate human language, driving innovations in translation, summarization, question answering, and conversational agents. Explore key concepts, methodologies, applications, and future directions in building intelligent systems for language understanding.

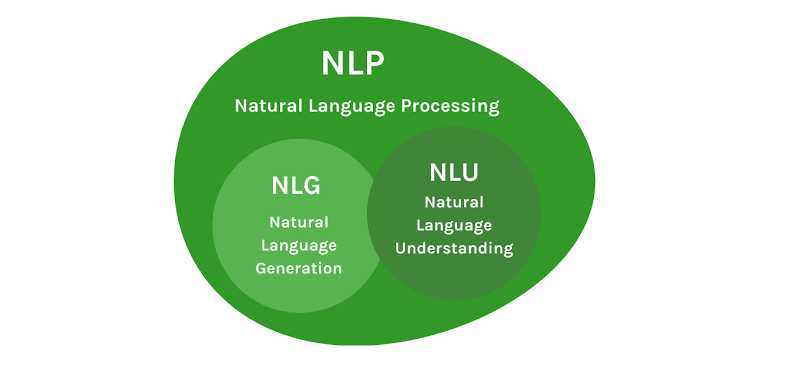

In artificial intelligence (AI), language modeling is a cornerstone technology that underpins many of the advances in natural language processing (NLP). Language models are at the heart of systems that perform tasks such as translation, summarization, and question answering, and that power conversational agents. These models enable machines to understand, generate, and respond to human language in increasingly sophisticated and nuanced ways. This article explores the foundations, methodologies, and applications of language modeling in building intelligent systems for language understanding.

Understanding Language Modeling

At its core, language modeling means assigning probabilities to sequences of words, usually by predicting each word from the words that come before it. These predictions can be used to generate text, complete sentences, or answer questions based on context. The primary goal of a language model is to capture the underlying structure and patterns of a language, enabling it to generate coherent and contextually relevant text.
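One standard way to write this down is the chain rule of probability, which breaks the probability of a whole sequence into a product of next-word predictions. The symbols here are notation introduced for illustration: $w_1, \dots, w_n$ stands for a sequence of $n$ words.

$$
P(w_1, w_2, \dots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \dots, w_{i-1})
$$

Each factor on the right is a prediction of one word given everything before it, which is why next-word prediction is enough to define a probability for an entire sentence.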

Language models are trained on large corpora of text data, learning the statistical properties of word sequences. Through this training, the models pick up regularities of grammar, syntax, and semantics, which are crucial for producing meaningful and contextually appropriate language. The effectiveness of a language model is often measured by how well it predicts the next word in a sequence given the preceding words, commonly summarized as perplexity on held-out text (lower is better).
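To make "learning the statistical properties of word sequences" concrete, here is a minimal sketch of the simplest count-based case: a model that conditions only on the single previous word. The function names, the `<s>` and `</s>` boundary markers, and the three-sentence corpus are all assumptions made for illustration, not part of any particular library.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> are illustrative start/end markers.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Maximum-likelihood estimate of P(next | prev) from the counts."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: c / total for word, c in followers.items()}

# Toy corpus, far too small for real use.
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

In this toy corpus "the" is followed by "cat" twice and "dog" once, so the model assigns them probabilities of 2/3 and 1/3. A word the model has never seen as context yields an empty distribution, which hints at why practical count-based models need smoothing.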

Types of Language Models

There are several types of language models, each with its own strengths and applications. The choice of model depends on the specific requirements of the task at hand.

1. N-gram Models: N-gram models are among the simplest forms of language models. They predict the next word in a sequence based on the previous n-1 words; a trigram model (n = 3), for example, looks only at the two preceding words. Because of this fixed window, n-gram models capture basic local patterns in language but struggle with long-range dependencies and wider context.

2. Recurrent Neural Networks (RNNs): RNNs are a type of neural network designed to handle sequential data. They maintain a hidden state that captures information about previous words in the sequence, allowing them to model longer contexts. However, RNNs can suffer from issues such as vanishing gradients, making it difficult to capture very long-range dependencies.

3. Long Short-Term Memory Networks (LSTMs) and Gated Recurrent Units (GRUs): LSTMs and GRUs are specialized types of RNNs that address the vanishing gradient problem. They use gating mechanisms to control the flow of information, enabling them to maintain and update hidden states more effectively. These models have been widely used in language modeling and have achieved significant success in various NLP tasks.

4. Transformer Models: Transformers represent a major breakthrough in language modeling. Unlike RNNs, transformers do not rely on sequential processing and instead use self-attention mechanisms to capture dependencies across the entire sequence. This allows transformers to model long-range dependencies more effectively. Notable transformer-based models include BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), which have set new benchmarks in numerous NLP tasks.
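The self-attention idea behind transformers can be sketched in a few lines. This is a deliberately stripped-down version, assuming identity projections (queries, keys, and values are all the raw input vectors), a single head, and no positional information; real transformers learn separate projection matrices for each of these. The function names and the toy input are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (an assumption)."""
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # Score this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # The output is a weighted average of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return outputs, weights

# Three toy token vectors of dimension 2.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every position attends to every other position in a single step, regardless of how far apart they are. That is the property that lets transformers handle long-range dependencies without passing information through a chain of hidden states as an RNN does.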

Key Components of Language Models

Building effective language models involves several key components and techniques. Understanding these components is essential for developing and deploying intelligent language systems.

1. Tokenization: Tokenization is the process of breaking down text into smaller units, typically words or subwords. Proper tokenization is crucial for language modeling, as it affects the model's ability to capture meaningful patterns and dependencies. Techniques such as byte-pair encoding (BPE) and WordPiece are commonly used for tokenization in modern language models.

2. Embeddings: Embeddings are dense vector representations of words or tokens that capture their semantic meanings. Pre-trained embeddings such as Word2Vec, GloVe, and fastText assign each word a single fixed vector and have been widely used to initialize language models. More recent models like BERT and GPT learn embeddings as part of their training process and produce contextualized representations, so the same word receives a different vector depending on the sentence it appears in.

3. Training Objectives: The training objective of a language model defines the task it is trained to perform. Common training objectives include masked language modeling (MLM), used by BERT, where certain tokens in the input are masked and the model learns to predict them from the surrounding context, and autoregressive language modeling, used by GPT, where the model predicts the next token in a sequence from the tokens before it.

4. Fine-tuning: Fine-tuning involves adapting a pre-trained language model to a specific task or domain by training it on a smaller, task-specific dataset. This approach leverages the general knowledge acquired during pre-training and refines it to improve performance on the target task.
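As an illustration of the tokenization component, the following is a simplified sketch of how byte-pair encoding learns its merges: start from individual characters and repeatedly fuse the most frequent adjacent pair. The function names and the word-frequency table are hypothetical, and production tokenizers add details omitted here, such as end-of-word markers and byte-level handling.

```python
from collections import Counter

def get_pair_counts(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            counts[(a, b)] += freq
    return counts

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merges from a {word: frequency} table."""
    words = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # most frequent adjacent pair
        words = merge_pair(words, best)
        merges.append(best)
    return merges, words

# Hypothetical word frequencies for illustration only.
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, segmented = learn_bpe(word_freqs, num_merges=2)
print(merges)
print(segmented)
```

With this toy table, the first two merges build the subword "est" out of "e", "s", and "t", because that ending is shared by the frequent words "newest" and "widest". Frequent character sequences become single tokens, while rare words remain split into smaller pieces the model has seen before.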

Applications of Language Modeling

Language modeling has a wide range of applications, driving many of the advancements in NLP and AI. Some of the most notable applications include:

1. Machine Translation: Language models are integral to machine translation systems, enabling them to translate text from one language to another. Services like Google Translate and Microsoft Translator use advanced language modeling techniques to provide real-time translation.

2. Text Summarization: Automatic text summarization relies on language models to generate concise summaries of longer documents. By understanding the context and key points of a text, language models can produce coherent and informative summaries.

3. Question Answering: Language models are used in question-answering systems to provide accurate and contextually relevant answers to user queries. BERT-style models are typically fine-tuned to extract an answer span from a given passage, while GPT-style models generate the answer as text.

4. Conversational Agents: Chatbots and virtual assistants like Siri, Alexa, and Google Assistant use language models to understand and respond to user inputs. These models enable conversational agents to engage in natural and meaningful interactions with users.

5. Text Generation: Language models can generate human-like text for various applications, including content creation, dialogue generation, and creative writing. GPT-3, for example, has been used to generate articles, stories, and even code snippets.

Challenges and Future Directions

Despite their impressive capabilities, language models face several challenges that need to be addressed to further enhance their performance and applicability.

1. Data Bias: Language models can inherit biases present in the training data, leading to biased or harmful outputs. Addressing data bias and ensuring fairness in language models is an ongoing area of research.

2. Interpretability: Understanding how language models make predictions and decisions is crucial for building trust and transparency. Improving the interpretability of these models is a key challenge that researchers are working to address.

3. Generalization: While language models perform well on specific tasks, ensuring their ability to generalize across different domains and contexts remains a challenge. Developing models that can adapt to a wide range of applications is an important goal.

4. Resource Requirements: Training large language models requires significant computational resources and energy. Finding ways to reduce the resource requirements and environmental impact of training these models is an important area of focus.

Looking ahead, the future of language modeling holds great promise. Research is focused on developing more efficient and robust models, addressing ethical concerns, and exploring new applications. Multimodal models that combine text, image, and audio data are also emerging, opening up new possibilities for language understanding and generation.

Conclusion

Language modeling is a fundamental technology in the field of artificial intelligence, enabling machines to understand, generate, and interact with human language. From simple n-gram models to advanced transformer architectures, language models have evolved significantly, driving advancements in natural language processing. With applications ranging from machine translation and text summarization to question answering and conversational agents, language modeling is transforming the way we interact with technology. As research continues to address challenges and explore new frontiers, language models are set to play an increasingly vital role in building intelligent systems for language understanding.