Explore how neural networks are revolutionizing machine translation, enhancing accuracy and fluency in language conversion. This comprehensive guide delves into the evolution of machine translation, the role of neural networks, and the benefits and challenges of modern translation technologies.

Introduction to Machine Translation

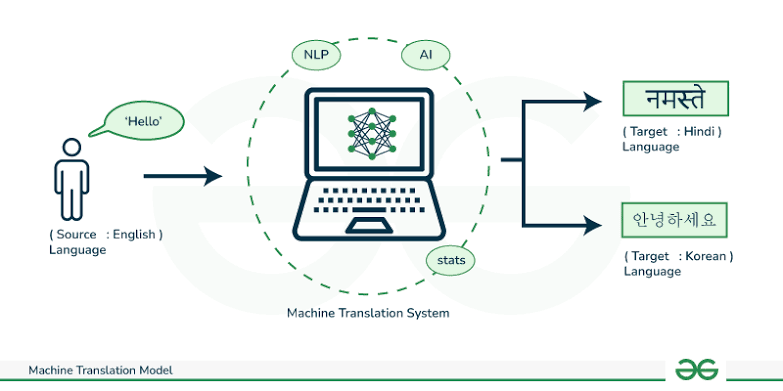

Machine translation (MT) is a technology for converting text or speech from one language to another using computational methods. This field of artificial intelligence (AI) has seen remarkable advancements over the past few decades, evolving from rule-based systems to sophisticated neural network models. The primary goal of machine translation is to bridge language barriers and facilitate communication across diverse linguistic backgrounds, and it has become an essential tool in today’s interconnected world. Neural networks, a subset of machine learning, have significantly advanced MT by improving translation accuracy and fluency.

The Evolution of Machine Translation

Machine translation has undergone several stages of development, each marked by different approaches and technological innovations. Initially, MT systems relied on rule-based methods, which used a set of grammatical rules and dictionaries to translate text. These early systems were often limited by their inability to handle complex linguistic nuances and contextual variations effectively.

The introduction of statistical machine translation (SMT) marked a significant shift. SMT systems used statistical models to predict translations based on patterns observed in large bilingual corpora. By analyzing extensive amounts of parallel text (texts that are translations of each other), SMT systems could identify likely translations and improve accuracy over time. However, SMT faced challenges with handling idiomatic expressions and maintaining contextual coherence.

The advent of neural machine translation (NMT) represented a major breakthrough. Unlike earlier methods, NMT employs deep learning techniques and neural networks to model complex linguistic patterns and generate translations. This approach has greatly enhanced translation quality by enabling models to understand context, handle nuances, and produce more fluent and natural-sounding translations.

How Neural Networks Revolutionize Machine Translation

Neural networks have transformed machine translation by providing a more advanced and effective approach to understanding and generating language. At the heart of this transformation are deep learning techniques, particularly sequence-to-sequence (seq2seq) models and attention mechanisms.

Sequence-to-Sequence Models: Sequence-to-sequence models are designed to handle the translation of sequences of text. In NMT, these models consist of two main components: an encoder and a decoder. The encoder processes the input text and converts it into a fixed-size vector representation, capturing its semantic meaning. The decoder then generates the translated text based on this vector representation. This approach allows for better handling of variable-length sentences and complex structures compared to previous methods.
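The encoder and decoder roles described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the tiny vocabularies, the `HIDDEN` size, the random untrained weights, and the helper names `encode` and `decode`. A real decoder would also feed each generated word back in as input, which this sketch omits. Its only purpose is to show that inputs of different lengths are folded into a vector of the same fixed size.

```python
import math
import random

random.seed(0)
HIDDEN = 4  # size of the fixed context vector (arbitrary choice)

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

# Hypothetical toy vocabularies, not taken from any real system.
SRC_VOCAB = {"the": 0, "cat": 1, "sleeps": 2}
TGT_VOCAB = ["<eos>", "le", "chat", "dort"]

EMBED = rand_matrix(len(SRC_VOCAB), HIDDEN)  # one row per source token
W_ENC = rand_matrix(HIDDEN, HIDDEN)
W_DEC = rand_matrix(HIDDEN, HIDDEN)
W_OUT = rand_matrix(len(TGT_VOCAB), HIDDEN)

def encode(tokens):
    """Fold a variable-length token list into one fixed-size vector."""
    h = [0.0] * HIDDEN
    for tok in tokens:
        x = EMBED[SRC_VOCAB[tok]]
        h = [math.tanh(a + b) for a, b in zip(matvec(W_ENC, h), x)]
    return h

def decode(context, max_len=5):
    """Greedily emit target tokens, starting from the context vector."""
    h, out = context, []
    for _ in range(max_len):
        h = [math.tanh(a) for a in matvec(W_DEC, h)]
        scores = matvec(W_OUT, h)
        word = TGT_VOCAB[scores.index(max(scores))]
        if word == "<eos>":
            break
        out.append(word)
    return out

short_vec = encode(["cat"])
long_vec = encode(["the", "cat", "sleeps"])
print(len(short_vec), len(long_vec))  # same length regardless of input size
print(decode(long_vec))               # untrained weights, so the words are arbitrary
```

Because the weights are random, the output words carry no meaning; training would adjust `EMBED`, `W_ENC`, `W_DEC`, and `W_OUT` so that the decoder produces actual translations.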

Attention Mechanisms: Attention mechanisms enhance sequence-to-sequence models by allowing the translation model to focus on different parts of the input sentence when generating each word in the output. This mechanism addresses the limitations of fixed-size vector representations by enabling the model to consider the most relevant parts of the input when producing the translation. Attention mechanisms have significantly improved translation accuracy and fluency by capturing contextual information more effectively.
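As a rough illustration of this idea, the sketch below scores each encoder state against the current decoder state, turns the scores into weights with a softmax, and builds a weighted average of the encoder states. It uses plain dot-product scoring for simplicity, which is only one of several scoring functions in use; the function name `attend` and the example vectors are made up for this illustration.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Return attention weights and the weighted sum of encoder states."""
    scores = [sum(q * k for q, k in zip(decoder_state, h)) for h in encoder_states]
    weights = softmax(scores)
    dim = len(decoder_state)
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, context

# Three encoder states, one per input word (illustrative values).
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [2.0, 0.0]  # most similar to the first encoder state

weights, context = attend(decoder_state, encoder_states)
print(weights)  # the first input position receives the largest weight
print(context)
```

The context vector is recomputed for every output word, so the decoder is no longer limited to a single fixed summary of the whole input sentence.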

Transformer Models: The introduction of transformer models marked another major advancement in neural machine translation. Transformers use self-attention mechanisms to process and relate all positions of the input sequence in parallel, rather than one token at a time. This approach enables transformers to capture long-range dependencies and complex relationships within the text, leading to more accurate and coherent translations. Well-known models such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer) are built on the same transformer architecture, though neither was designed specifically for translation: BERT is an encoder-only model aimed at language understanding, and GPT is a decoder-only general-purpose text generator. Dedicated MT systems typically use the full encoder-decoder transformer.
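A minimal sketch of self-attention follows, under a strong simplifying assumption: the input vectors serve directly as queries, keys, and values, whereas real transformers first apply separate learned projections and use multiple attention heads. The function name `self_attention` and the example vectors are hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with queries = keys = values = x."""
    d = len(x[0])
    all_weights, outputs = [], []
    for q in x:  # every position attends to every position, itself included
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        all_weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return all_weights, outputs

# Three token vectors; the first and third are identical on purpose.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
attn, mixed = self_attention(tokens)
for row in attn:
    print(row)  # each row of weights sums to 1
print(mixed[0] == mixed[2])  # identical inputs produce identical outputs
```

No position depends on the result of another, which is why the rows can be computed in parallel. The last line also shows why transformers add positional information to their inputs: without it, self-attention cannot tell two identical tokens at different positions apart.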

Applications and Benefits of Neural Machine Translation

Neural machine translation has numerous practical applications and offers several benefits over traditional translation methods:

Enhanced Accuracy and Fluency: NMT models produce translations that are more accurate and fluent compared to previous methods. By capturing contextual information and generating more natural-sounding translations, NMT systems can handle complex sentences and idiomatic expressions more effectively.

Real-Time Translation: NMT enables real-time translation in applications such as online communication, travel, and customer support. Real-time translation tools facilitate instant communication between speakers of different languages, breaking down language barriers and fostering global collaboration.

Content Localization: NMT is widely used in content localization, where businesses adapt their products, services, and marketing materials for different linguistic and cultural contexts. Accurate and culturally appropriate translations are essential for effective localization and reaching diverse audiences.

Cross-Language Information Retrieval: NMT improves cross-language information retrieval by enabling users to search for and access content in different languages. This capability enhances access to global information and resources, benefiting research, education, and business.

Text Summarization: The sequence-to-sequence architectures behind NMT can also be applied to summarizing long documents or articles, producing concise versions that capture essential information, which is useful for information retrieval and consumption.

Challenges and Limitations

Despite its advancements, neural machine translation still faces several challenges and limitations:

Handling Ambiguity and Context: While NMT models have improved context handling, they can still struggle with ambiguous or context-dependent phrases. Ensuring that translations accurately reflect the intended meaning requires ongoing refinement and model training.

Bias and Fairness: NMT models trained on biased data can produce translations that reinforce existing biases or stereotypes. Addressing these issues involves ensuring diverse and representative training data and developing techniques to mitigate bias in translations.

Resource-Intensive Training: Training neural machine translation models requires substantial computational resources and large amounts of data. This resource intensity can limit the accessibility of advanced NMT systems and pose challenges for smaller organizations or languages with limited data availability.

Preserving Cultural Nuances: Translating content across languages involves not only linguistic but also cultural considerations. Ensuring that translations respect and accurately convey cultural nuances is a challenge that requires careful attention to context and audience.

Future Directions

The future of machine translation holds exciting possibilities as researchers continue to explore and develop new techniques. Areas of focus include improving model efficiency, handling low-resource languages, and enhancing the ability to capture subtle cultural and contextual nuances. Advances in multilingual models, transfer learning, and reinforcement learning are likely to contribute to the ongoing evolution of machine translation technologies.

In conclusion, machine translation powered by neural networks represents a significant advancement in breaking language barriers and facilitating global communication. By leveraging deep learning techniques and neural network architectures, modern translation systems offer enhanced accuracy, fluency, and real-time capabilities. While challenges remain, ongoing research and innovation continue to drive progress in the field, promising even greater breakthroughs in the future.