Scene understanding is a crucial part of building capable autonomous systems, including self-driving cars, robotic assistants, and advanced surveillance systems. It involves interpreting and contextualizing objects and their relationships within a scene, which enables machines to make informed decisions and interact effectively with their environment. Accurate scene understanding depends on several key elements: object recognition, spatial reasoning, and environmental context.

Scene understanding refers to a system's ability to perceive and interpret a visual environment in a way similar to human understanding. It encompasses recognizing objects, determining their locations and relationships, and comprehending the overall context of a scene. This capability is essential for autonomous systems to operate safely and efficiently in dynamic environments. By integrating advanced computer vision techniques with artificial intelligence (AI), these systems can process visual information in real time, which allows them to navigate, make decisions, and interact with their surroundings autonomously.

Object Recognition and Classification

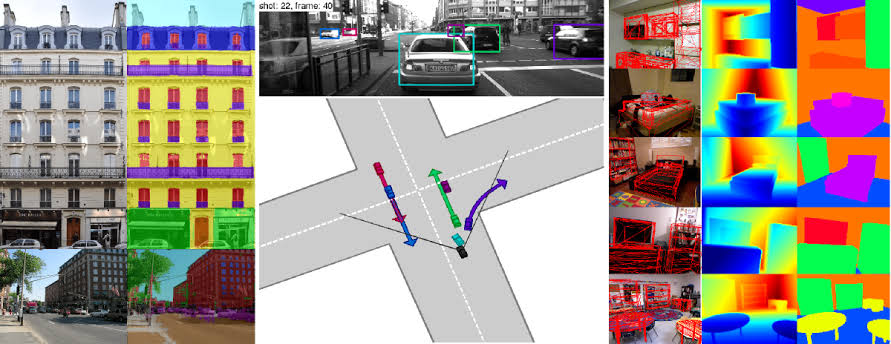

At the core of scene understanding lies object recognition and classification. This process involves identifying objects within an image or video feed and categorizing them into predefined classes. Machine learning models, particularly deep learning algorithms, play a significant role in this task. Convolutional neural networks (CNNs) are commonly used for object detection due to their ability to extract hierarchical features from images. By training these networks on large datasets, autonomous systems can learn to recognize a wide range of objects, from vehicles and pedestrians to traffic signs and road markings.
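To make the idea of feature extraction concrete, here is a minimal sketch of the three operations a single CNN stage typically chains together: convolution, a ReLU non-linearity, and max pooling. It is written in plain Python for readability rather than speed. The hand-picked edge kernel and the toy 6x6 image are assumptions made for illustration; a real network learns its kernels from training data and stacks many such stages.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap):
    """Keep positive responses and zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Downsample by taking the maximum of each non-overlapping 2x2 window."""
    pooled = []
    for r in range(0, len(fmap) - 1, 2):
        pooled.append([
            max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
            for c in range(0, len(fmap[0]) - 1, 2)
        ])
    return pooled


# Toy image (assumption): dark left half (0), bright right half (1).
IMAGE = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-written kernel (assumption) that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

edge_response = conv2d(IMAGE, VERTICAL_EDGE)
features = max_pool2x2(relu(edge_response))
```

Each row of `edge_response` is `[0.0, 3.0, 3.0, 0.0]`: the filter fires only where its window straddles the boundary between the dark and bright halves. Deeper layers combine many such low-level responses into progressively more abstract features, which is what "hierarchical" refers to.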

Object recognition is not limited to identifying individual items; it also extends to understanding their roles within a scene. For example, a self-driving car needs to distinguish between different types of road users, such as cars, trucks, and bicycles, and assess their behavior to make safe driving decisions. Similarly, a robotic assistant must recognize household items and understand their functions to perform tasks effectively.

Spatial Reasoning and Scene Layout

Beyond recognizing objects, spatial reasoning is essential for understanding the layout and structure of a scene. This involves determining the positions, orientations, and relationships of objects relative to one another. For instance, in a driving scenario, a vehicle must assess the distance between itself and other objects, such as nearby cars or pedestrians, to avoid collisions and navigate safely.
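As a rough illustration of this kind of reasoning, the sketch below computes the distance and bearing of detected objects relative to the vehicle and picks the nearest one in its path. The `TrackedObject` type, the ego-centred coordinate convention (x forward, y to the left, in metres), and the 1.5 m corridor half-width are all assumptions made for the example, not part of any particular system.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedObject:
    label: str
    x: float  # metres ahead of the ego vehicle (assumed forward axis)
    y: float  # metres to the left of the ego vehicle (assumed lateral axis)


def range_and_bearing(obj):
    """Return (distance in metres, bearing in degrees, 0 = straight ahead)."""
    distance = math.hypot(obj.x, obj.y)
    bearing_deg = math.degrees(math.atan2(obj.y, obj.x))
    return distance, bearing_deg


def closest_in_path(objects, half_width=1.5):
    """Nearest object ahead of the vehicle within a straight corridor.

    half_width is a hypothetical corridor half-width in metres.
    Returns None when the corridor is clear.
    """
    ahead = [o for o in objects if o.x > 0 and abs(o.y) <= half_width]
    return min(ahead, key=lambda o: o.x, default=None)


scene = [
    TrackedObject("car", 20.0, 0.5),
    TrackedObject("pedestrian", 8.0, 4.0),   # close, but off to the side
    TrackedObject("cyclist", 12.0, -1.0),
]
lead = closest_in_path(scene)  # the cyclist: nearest object inside the corridor
```

The pedestrian is the closest object overall but lies outside the corridor, which is why relative position matters and raw distance alone is not enough.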

Techniques such as stereo vision, depth sensing, and simultaneous localization and mapping (SLAM) contribute to spatial reasoning. Stereo vision uses multiple cameras to capture images from different angles, allowing the system to estimate the depth of objects and create a three-dimensional representation of the scene. Depth sensors, such as LiDAR (Light Detection and Ranging), provide precise distance measurements, further enhancing spatial awareness. SLAM algorithms help in creating and updating maps of the environment while simultaneously tracking the system's position within that map.
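For stereo vision, the depth estimate comes from a simple geometric relation. Assuming an idealized, rectified pair of identical pinhole cameras, a point that appears shifted by a disparity of d pixels between the left and right images lies at depth Z = f * B / d, where f is the focal length in pixels and B is the distance between the cameras. The function and parameter names below are illustrative.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair (idealized pinhole model).

    disparity_px:    horizontal pixel shift of the point between the two images
    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity in this model.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Example values are assumptions: 700 px focal length, 0.5 m baseline.
near = depth_from_disparity(70, focal_length_px=700, baseline_m=0.5)  # 5.0 m
far = depth_from_disparity(35, focal_length_px=700, baseline_m=0.5)   # 10.0 m
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones. This is one reason stereo is often complemented by direct range sensors such as LiDAR.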

Environmental Context and Scene Interpretation

Understanding the broader context of a scene is crucial for autonomous systems to interpret complex scenarios accurately. Environmental context includes factors such as lighting conditions, weather, and the presence of dynamic elements like moving vehicles or changing traffic signals. Integrating contextual information allows systems to adapt their behavior and make more informed decisions.

For example, in adverse weather conditions, such as rain or fog, the visibility of objects may be reduced. Autonomous systems must adjust their perception algorithms to account for these changes, ensuring accurate object detection and safe navigation. Additionally, understanding contextual cues, such as traffic flow patterns or pedestrian behavior, enables systems to respond appropriately to varying situations, such as yielding to pedestrians at crosswalks or adjusting speed based on traffic conditions.
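One simple way to turn reduced visibility into a behavioral adjustment is to cap speed so that the vehicle can always stop within the distance it can currently perceive. The sketch below solves the standard stopping-distance relation, visibility = v * t + v^2 / (2 * a), for the speed v. The reaction time and deceleration defaults are illustrative assumptions, not values from any standard or production system.

```python
import math


def max_safe_speed(visibility_m, reaction_time_s=1.0, decel_mps2=5.0):
    """Highest speed (m/s) at which the vehicle can stop within visibility_m.

    Solves visibility = v * t + v**2 / (2 * a) for v, where t is the system's
    reaction time and a is the braking deceleration. Both defaults are
    assumed values chosen only for illustration.
    """
    t, a = reaction_time_s, decel_mps2
    return a * (-t + math.sqrt(t * t + 2.0 * visibility_m / a))


clear_day = max_safe_speed(150.0)  # long perception range
dense_fog = max_safe_speed(30.0)   # perception range cut by fog
```

With these assumed parameters, cutting the perception range from 150 m to 30 m lowers the speed cap substantially. The same pattern applies to other contextual signals, such as using a longer assumed braking distance on wet roads.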

Challenges in Scene Understanding

While significant progress has been made in scene understanding, several challenges remain. One major challenge is achieving robustness and reliability in diverse and unpredictable environments. Autonomous systems must handle variations in lighting, weather, and occlusions, which can affect the accuracy of object recognition and spatial reasoning.

Another challenge is dealing with the complexity of real-world scenes. Scenes often contain numerous objects with varying sizes, shapes, and movements, making it difficult for systems to process and interpret all relevant information simultaneously. Ensuring that autonomous systems can accurately and efficiently handle such complexity requires ongoing research and development in computer vision and AI.

Future Directions and Developments

The future of scene understanding holds promising advancements driven by continuous improvements in technology and research. Emerging techniques in deep learning, such as transformer-based models and generative adversarial networks (GANs), offer new possibilities for enhancing object recognition and scene interpretation. These models can learn more complex relationships between objects and better generalize to unseen scenarios.
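The mechanism that lets transformer-based models relate objects to one another is attention. Below is a minimal, plain-Python sketch of scaled dot-product attention: each query is compared against every key, the similarities are normalized with a softmax, and the output is the corresponding weighted average of the values. The toy vectors are assumptions for illustration; real models use learned projections, multiple heads, and optimized tensor libraries.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Toy example (assumption): two scene elements with identical keys,
# so the query attends to both equally and the output is their average.
result = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [1.0, 0.0]],
    values=[[0.0], [2.0]],
)
```

In a scene-understanding setting, the queries, keys, and values would be derived from image patches or detected objects, so the weights express how strongly one part of the scene informs the interpretation of another.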

Additionally, advancements in sensor technology, such as higher-resolution cameras and more sophisticated depth sensors, will contribute to improved spatial reasoning and scene perception. Integrating multimodal data from various sensors, such as visual, auditory, and tactile inputs, can further enhance scene understanding and provide a more comprehensive view of the environment.
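A basic building block for combining measurements from several sensors is inverse-variance weighting: each sensor's estimate is weighted by how certain it is. The sketch below fuses distance estimates under the assumption that the measurement errors are independent and unbiased. The sensor names and variance figures in the example are made up for illustration.

```python
def fuse_estimates(estimates):
    """Fuse independent measurements of the same quantity.

    estimates: list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance) using inverse-variance weighting,
    which assumes independent, unbiased measurement errors.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Hypothetical readings of the distance to the same obstacle, in metres.
camera = (10.0, 4.0)  # noisier estimate (variance 4.0 m^2, assumed)
lidar = (12.0, 1.0)   # more precise estimate (variance 1.0 m^2, assumed)

distance, variance = fuse_estimates([camera, lidar])
```

The fused distance lands closer to the more precise sensor, and the fused variance is lower than either input. This is the quantitative sense in which combining modalities gives a more comprehensive view than any single sensor.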

Furthermore, interdisciplinary research involving cognitive science and neuroscience may lead to novel approaches for mimicking human-like scene understanding. By studying how humans perceive and interpret visual information, researchers can develop more intuitive and effective algorithms for autonomous systems.

Conclusion

Scene understanding is a critical component of autonomous systems, enabling them to navigate, interact, and make decisions based on their visual environment. By combining object recognition, spatial reasoning, and contextual interpretation, these systems can achieve a high level of situational awareness and operate effectively in diverse scenarios. While challenges remain, ongoing advancements in technology and research continue to drive progress in scene understanding, paving the way for more capable and intelligent autonomous systems in the future.