Explore the top 10 explainable AI (XAI) solutions that enhance the transparency and interpretability of machine learning models. This guide covers methods such as LIME, SHAP, Grad-CAM, and more, highlighting their features, applications, and benefits for creating understandable and accountable AI systems.

Top 10 Explainable AI (XAI) Solutions

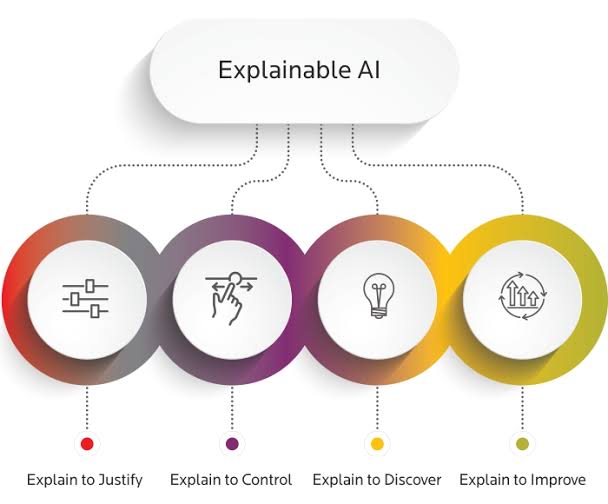

Explainable AI (XAI) is a branch of artificial intelligence that focuses on making machine learning models and their decision-making processes comprehensible to humans. As AI systems grow more complex, understanding how they arrive at their conclusions becomes vital for trust, transparency, and accountability. XAI aims to tackle the "black box" problem of models that produce predictions without revealing their inner workings. This guide explores ten widely used explainable AI solutions, covering their features, applications, and advantages.

1. **LIME (Local Interpretable Model-agnostic Explanations)**

LIME explains individual predictions made by machine learning models. It does this by fitting a simpler, interpretable model (typically a sparse linear model) that approximates the complex model in the neighborhood of the prediction in question. This lets users see how each feature influenced that particular decision. Because it only needs access to the model's predictions, LIME can be used with any machine learning algorithm, making it a flexible tool for clarifying model results in various fields.
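The local-surrogate idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the `lime` library's API: `black_box`, `local_surrogate`, the Gaussian perturbation scheme, and the kernel width are all assumptions chosen for the example.

```python
import numpy as np

def black_box(X):
    # Hypothetical nonlinear model standing in for any trained predictor.
    return np.sin(X[:, 0]) + X[:, 1] ** 2

def local_surrogate(predict, x, n_samples=2000, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around x; return (coefs, intercept)."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with small Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    y = predict(Z)
    # 2. Weight each perturbed sample by its closeness to x.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / (kernel_width ** 2))
    # 3. Weighted least squares on the centered perturbations.
    A = np.column_stack([Z - x, np.ones(n_samples)])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return beta[:-1], beta[-1]

x0 = np.array([0.0, 1.0])
coefs, intercept = local_surrogate(black_box, x0)
print("local feature weights:", coefs)
```

The fitted coefficients approximate the model's local slope at `x0`, which is what a LIME-style explanation reports as each feature's influence on that one prediction.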

2. **SHAP (SHapley Additive exPlanations)**

SHAP is a popular method in explainable AI that offers consistent explanations for individual predictions. Derived from cooperative game theory, SHAP assigns each feature a Shapley value: its average marginal contribution to the prediction across all possible combinations of the other features. This makes the attributions fair and consistent, because they account for how features interact with each other, and they sum to the difference between the prediction and a baseline. SHAP is applicable to any machine learning model and is particularly useful for analyzing complex data.
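For a model with only a few features, Shapley values can be computed exactly by brute force, which makes the definition concrete. The sketch below is an illustration under stated assumptions, not the `shap` library: `model` is a hypothetical function, and an "absent" feature is simulated by substituting a baseline value. The cost grows exponentially with the number of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical model with an interaction between features 0 and 1;
    # feature 2 has no effect.
    return 2.0 * x[0] + x[1] + x[0] * x[1] + 0.0 * x[2]

def shapley_values(predict, x, baseline):
    """Exact Shapley values; absent features take their baseline value."""
    n = len(x)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for S in combinations(others, size):
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                # Marginal contribution of feature i when added to coalition S.
                phi[i] += weight * (value(set(S) | {i}) - value(set(S)))
    return phi

x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)  # contributions sum to model(x) - model(baseline)
```

The interaction term `x[0] * x[1]` is split evenly between the two features involved, and the unused feature receives zero.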

3. **Grad-CAM (Gradient-weighted Class Activation Mapping)**

Grad-CAM is a technique used to interpret convolutional neural networks (CNNs), most commonly in image classification. It provides visual explanations by highlighting the areas of an image that most strongly affect the model's prediction. Grad-CAM uses the gradients of the target class score with respect to the feature maps of a convolutional layer (usually the last one) to build a class activation map, helping users see which image regions influenced the model's decision. This technique is valuable in computer vision, where visual interpretability is crucial.
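The core Grad-CAM computation is small once the feature maps and their gradients are available. The sketch below assumes both have already been extracted from a network (for example with framework hooks, not shown) and uses made-up arrays; `grad_cam` is a hypothetical helper name.

```python
import numpy as np

def grad_cam(activations, gradients):
    """activations, gradients: arrays of shape (channels, height, width)."""
    # Channel weights: global-average-pool the gradients over space.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    # Normalize to [0, 1] for display; guard against an all-zero map.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Made-up values for a 2-channel, 3x3 convolutional layer.
activations = np.zeros((2, 3, 3))
activations[0, 1, 1] = 4.0   # channel 0 fires at the center
activations[1, 0, 0] = 4.0   # channel 1 fires at the top-left corner
gradients = np.stack([np.full((3, 3), 0.5), np.full((3, 3), -0.5)])

heatmap = grad_cam(activations, gradients)
print(heatmap)
```

Channel 0 has a positive gradient, so its active region lights up in the heatmap; channel 1 argues against the class and is removed by the ReLU. In practice the map is upsampled to the image size and overlaid on the input.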

4. **Integrated Gradients**

Integrated Gradients is a method for attributing the predictions of deep learning models to their input features. It computes the gradients of the model's output with respect to the input features at points along a straight-line path from a baseline input (such as an all-zero input) to the actual input, then accumulates them. The result measures how much each feature contributes to the model's decision, and the attributions sum to the difference between the output at the input and the output at the baseline. Integrated Gradients is especially useful for neural networks because it is reliable and works with any differentiable model.
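A minimal numerical sketch of the path integral follows, assuming a toy logistic model with a hand-written gradient. The weights `W`, the zero baseline, and the step count are illustrative choices; with a real network the gradient would come from automatic differentiation.

```python
import numpy as np

W = np.array([1.5, -2.0, 0.5])  # hypothetical weights of a logistic unit

def f(x):
    return 1.0 / (1.0 + np.exp(-(x @ W)))

def grad_f(x):
    # Analytic gradient of the sigmoid output with respect to x.
    p = f(x)
    return p * (1.0 - p) * W

def integrated_gradients(x, baseline, grad, steps=200):
    # Midpoint Riemann sum of gradients along the straight-line path.
    alphas = (np.arange(steps) + 0.5) / steps
    path = baseline + alphas[:, None] * (x - baseline)
    avg_grad = np.mean([grad(p) for p in path], axis=0)
    return (x - baseline) * avg_grad

x = np.array([1.0, 0.5, 2.0])
baseline = np.zeros(3)
attributions = integrated_gradients(x, baseline, grad_f)
print(attributions, attributions.sum(), f(x) - f(baseline))
```

Comparing the sum of the attributions with `f(x) - f(baseline)` is a quick sanity check that enough integration steps were used.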

5. **Feature Importance**

Feature importance is a simple but effective way to see which attributes drive a machine learning model's predictions. By assessing the influence of each feature on the model's performance, this method pinpoints the variables that matter most. There are several ways to calculate it, including permutation importance (how much performance drops when a feature's values are shuffled) and mean decrease in impurity. This technique is frequently applied to tree-based models such as random forests and gradient boosting machines.
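Permutation importance is easy to implement by hand. The sketch below uses synthetic data and an ordinary least-squares fit as a stand-in model; the data, the `mse` metric, and the function names are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
# Synthetic target: feature 0 matters most, feature 2 not at all.
y = 3.0 * X[:, 0] + 1.0 * X[:, 1] + rng.normal(0.0, 0.1, size=500)

# Stand-in model: ordinary least squares fitted with NumPy.
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

def predict(data):
    return data @ coef

def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))

def permutation_importance(predict_fn, X, y, n_repeats=10, seed=0):
    """Average increase in MSE after shuffling each feature column."""
    shuffle_rng = np.random.default_rng(seed)
    base = mse(y, predict_fn(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = shuffle_rng.permutation(Xp[:, j])  # break the link to y
            importances[j] += mse(y, predict_fn(Xp)) - base
    return importances / n_repeats

importances = permutation_importance(predict, X, y)
print(importances)
```

Shuffling a feature the model relies on raises the error sharply, while shuffling an irrelevant feature leaves it nearly unchanged.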

6. **Partial Dependence Plots (PDPs)**

Partial Dependence Plots (PDPs) are graphical representations of the relationship between a specific feature and the predicted outcome, averaged over the observed values of the other features. These plots help users see how changes in a feature influence the model's predictions. By illustrating a feature's average effect on the predicted result, PDPs reveal whether the relationship is linear, monotonic, or more complex, and plotting two features together can hint at interactions between them. This method is useful for understanding the influence of features within complex models.
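The values behind a PDP can be computed directly: fix the feature at each grid value for every row, predict, and average. The sketch below assumes a hypothetical `model` function and random data; only the numbers are produced, and plotting is left out.

```python
import numpy as np

def model(X):
    # Hypothetical fitted model: quadratic in feature 0, linear in feature 1.
    return X[:, 0] ** 2 + 2.0 * X[:, 1]

def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    pd_values = []
    for v in grid:
        Xv = X.copy()
        Xv[:, feature] = v          # force the feature to v for every row
        pd_values.append(predict(Xv).mean())
    return np.array(pd_values)

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
grid = np.linspace(-1.0, 1.0, 5)
pdp = partial_dependence(model, X, 0, grid)
print(dict(zip(grid.round(2), pdp.round(3))))
```

Plotting `pdp` against `grid` recovers the quadratic shape of feature 0's effect, shifted by the average contribution of feature 1.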

7. **ICE (Individual Conditional Expectation) Plots**

ICE plots take the concept of PDPs further by showing how predictions for individual data points respond to changes in a feature. Unlike PDPs, which show an average effect across the dataset, ICE plots draw one curve per observation. This helps identify differences in a feature's impact across observations and uncovers patterns that are hidden in averaged effects. ICE plots are valuable for understanding how the model treats different data points.
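A small example shows why per-observation curves matter. In this sketch the hypothetical `model` makes the effect of feature 0 flip sign depending on feature 1, so the averaged curve is flat even though every individual curve has a clear slope.

```python
import numpy as np

def model(X):
    # Hypothetical model: the effect of feature 0 flips sign with feature 1.
    return X[:, 0] * X[:, 1]

def ice_curves(predict, X, feature, grid):
    """Return an array (n_rows, n_grid): one prediction curve per observation."""
    curves = np.empty((X.shape[0], grid.size))
    for k, v in enumerate(grid):
        Xv = X.copy()
        Xv[:, feature] = v
        curves[:, k] = predict(Xv)
    return curves

X = np.array([[0.0, 1.0], [0.0, -1.0]])
grid = np.linspace(0.0, 2.0, 3)
curves = ice_curves(model, X, 0, grid)
pdp = curves.mean(axis=0)   # the PDP is the average of the ICE curves

print(curves)  # one rising curve, one falling curve
print(pdp)     # the average hides both trends
```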

8. **Decision Trees**

Decision trees are easy-to-understand machine learning models that produce explicit decision rules. Each internal node tests a feature value, each branch corresponds to an outcome of that test, and each path ends in a prediction at a leaf node. This structure lets users follow the decision-making process step by step and grasp the logic behind a prediction. Although decision trees may not capture complex relationships as well as more sophisticated models, their simplicity makes them a useful asset for explainable AI.
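The traceability of a tree can be shown with a tiny hand-built example that returns both the prediction and the path that led to it. The tree, its feature names, and its thresholds are invented for illustration and were not learned from data.

```python
# Hypothetical hand-built tree for a loan decision; thresholds are illustrative.
TREE = {
    "feature": "income", "threshold": 50000,
    "left": {"leaf": "deny"},
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"leaf": "approve"},
        "right": {"leaf": "deny"},
    },
}

def predict_with_path(tree, sample):
    """Walk from the root to a leaf, recording each test that was applied."""
    path = []
    node = tree
    while "leaf" not in node:
        name, thr = node["feature"], node["threshold"]
        if sample[name] <= thr:
            path.append(f"{name} <= {thr}")
            node = node["left"]
        else:
            path.append(f"{name} > {thr}")
            node = node["right"]
    return node["leaf"], path

label, path = predict_with_path(TREE, {"income": 72000, "debt_ratio": 0.25})
print(label, "because", " and ".join(path))
```

The recorded path is the explanation: every prediction comes with the exact sequence of comparisons that produced it.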

9. **Rule-Based Models**

Rule-based models make predictions with explicit if-then rules, which makes them transparent by construction. These models build a set of rules from feature values and their combinations to classify or predict data. Rule-based models are easy to explain because the rules that drive each prediction are directly visible. This approach is especially valuable in fields where transparency is essential, such as finance and healthcare.
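An ordered rule list is one simple form such a model can take. The sketch below is purely illustrative: the rules, field names, and thresholds are hypothetical and hand-written, whereas real systems typically learn or curate them from data.

```python
# Hypothetical ordered rule list for flagging transactions; first match wins.
RULES = [
    ("amount > 10000 and foreign",
     lambda t: t["amount"] > 10000 and t["foreign"], "review"),
    ("tx_last_hour > 5",
     lambda t: t["tx_last_hour"] > 5, "review"),
]
DEFAULT_LABEL = "approve"

def classify(transaction):
    """Return (label, rule text) so every decision carries its own reason."""
    for description, condition, label in RULES:
        if condition(transaction):
            return label, description
    return DEFAULT_LABEL, "no rule matched"

decision, reason = classify({"amount": 12500, "foreign": True, "tx_last_hour": 1})
print(decision, "because", reason)
```

Returning the matched rule alongside the label keeps the explanation and the prediction in sync.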

10. **Counterfactual Explanations**

Counterfactual explanations describe the changes to an input that would be needed to change a model's prediction. By presenting scenarios in which input attributes are altered (for example, "the loan would have been approved if the debt ratio were lower"), these explanations help users understand how inputs influence results. This approach is useful for delivering actionable insights and for showing the relationship between feature changes and predictions.
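A basic counterfactual search can be sketched as a grid search over single-feature changes. The linear classifier, its weights, and the step size below are assumptions for the example; practical methods also handle multi-feature changes and plausibility constraints.

```python
import numpy as np

W = np.array([0.8, -1.2, 0.5])   # hypothetical classifier weights
B = -1.03                        # hypothetical bias

def predict(x):
    return int(x @ W + B >= 0)

def shifted(x, i, delta):
    z = x.copy()
    z[i] += delta
    return z

def single_feature_counterfactual(x, step=0.05, max_steps=200):
    """Smallest single-feature change (on a grid) that flips the prediction.

    Returns (feature index, signed change), or None if nothing flips it.
    """
    original = predict(x)
    best = None
    for i in range(x.size):
        for k in range(1, max_steps + 1):
            flips = [d for d in (k * step, -k * step)
                     if predict(shifted(x, i, d)) != original]
            if flips:
                if best is None or abs(flips[0]) < abs(best[1]):
                    best = (i, flips[0])
                break   # smallest change for this feature found
    return best

x = np.array([1.0, 0.5, 0.4])
feature, change = single_feature_counterfactual(x)
print(f"prediction {predict(x)} flips if feature {feature} changes by {change:+.2f}")
```

The result reads directly as a counterfactual statement: which feature to change, and by how much, to obtain a different outcome.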

Conclusion

In the world of machine learning, explainable AI plays a crucial role in fostering trust and ensuring clarity in how models operate. The ten solutions covered here (LIME, SHAP, Grad-CAM, Integrated Gradients, Feature Importance, PDPs, ICE Plots, Decision Trees, Rule-Based Models, and Counterfactual Explanations) offer a range of approaches to making AI models easier to understand. Each method has its own strengths and applications, serving as a valuable tool across various industries. By integrating explainable AI techniques, organizations can boost the transparency of their AI systems, build user confidence, and make well-informed decisions based on insights from their models.